The Modern WWW, Or: Where Do We Want To Go From Here?

From the early days of ARPANET until the dawn of the World Wide Web (WWW), the internet was primarily the domain of researchers, teachers and students, with hobbyists running their own BBS servers you could dial into, yet not connected to the internet. Pitched in 1989 by Tim Berners-Lee while working at CERN, the WWW was intended as an information management system that’d provide standardized access to information using HTTP as the transfer protocol and HTML and later CSS to create formatted documents inspired by the SGML standard. Even better, it allowed for WWW forums and personal websites to begin to pop up, enabling the eternal joy of web rings, animated GIFs and forums on any conceivable topic.

During the early 90s, as the newly opened WWW began to gain traction with the public, the Mosaic browser formed the backbone of the WWW browsers (‘web browsers’) of the time, including Internet Explorer – which licensed the Mosaic code – and the Mosaic-based Netscape Navigator. With the WWW standards set by the – Berners-Lee-founded – World Wide Web Consortium (W3C), the stage appeared to be set for an open and fair playing field for all. What we got instead was the brawl referred to as the ‘browser wars’, which – although changed – continues to this day.

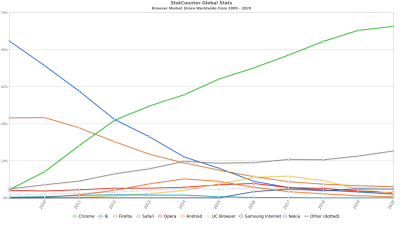

Today it isn’t Microsoft’s Internet Explorer that’s ruling the WWW while setting the course for new web standards, but instead we have Google’s Chrome browser partying like it’s the early 2000s and it’s wearing an IE mask. With former competitors like Opera and Microsoft having switched to the Chromium browser engine that underlies Chrome, what does this tell us about the chances for alternative browsers and the future of the WWW?

We’re Not In Geocities Any More

For those of us who were around to experience the 1990s WWW, this was definitely a wild time, with the ‘cyber highway’ being both hyped up and yet incredibly limited in its capabilities. Operating systems didn’t come with any kind of web browser until special editions of Windows 95 and the like began to include one. In the case of Windows this was of course Internet Explorer (3+), but if you were so inclined you could buy a CD with a Netscape, Opera or other browser and install that. Later, you could also download the free ‘Personal Edition’ of Netscape Navigator (later Communicator) or the ad-supported version of Opera, if you had a lot of dial-up minutes to burn through, or had a chance to leech off the school’s broadband link.

Once online in the 90s you were left with the dilemma of where to go and what to do. With no Google Search and only a handful of terrible search engines along with website portals to guide you, it was more about the joys of discovery. All too often you’d end up on a web ring and a host of Geocities or similar hobby sites, with the focus being primarily, as Tim Berners-Lee had envisioned, on sharing information. Formatting was basic, and beyond some sites using fancy framesets and lots of images, things tended to Just Work©, until the late 90s when we got Dynamic HTML (DHTML), Visual Basic Script (VBS) and JavaScript (JS), along with Java Applets and Flash.

VBS was the surprising victim of JS, with the former being part of IE along with other Microsoft products long before JS got thrown together and pushed into production in less than a week of total design and implementation time just so that Netscape could have some scripting language to compete with. This was the era when Netscape was struggling to keep up with Microsoft, despite the latter otherwise having completely missed the boat on this newfangled ‘internet’ thing. One example of this was when Internet Explorer had implemented the HTML iframe feature while Netscape 4.7x had not, leading to one of the first notable examples of websites breaking that was not due to Java Applets.

As the 2000s rolled around, the dot-com bubble was on the verge of imploding, which left us with a number of survivors, including Google and Amazon, and we would soon swap Geocities and web rings for MySpace, Facebook and kin. Meanwhile, the concept of web browsers as payware had fallen by the wayside, as some envisioned them as being targets for Open Source Software projects (e.g. Mozilla Organization), or as an integral part of being a WWW-based advertising company (Google with Chromium/Chrome).

What’s Your Time Worth

When it comes to the modern WWW, there are a few aspects to consider. The first is that of web browsers, as these form the required client software to access the WWW’s resources. Since the 1990s, the complexity of such software has skyrocketed. Rather than being simple HTML layout engines that accept a CSS stylesheet to spruce things up, they now have to deal with complex HTML 5 elements like <canvas>, and CSS has morphed into a scripting language nearly as capable and complex as JavaScript.

JavaScript meanwhile has changed from the ‘dynamic’ part of DHTML into a Just-In-Time accelerated monstrosity just to keep up with the megabytes of JS frameworks that have to be parsed and run for a simple page load of the average website, or ‘web app’, as they are now more commonly called. Meanwhile there’s increasingly more use of WebAssembly, which essentially adds a third language runtime to the application. The native APIs exposed to the JavaScript side are now expected to offer everything from graphics acceleration to access to microphones, webcams and serial ports.

Back in 2010, when I innocently embarked on the ‘simple’ task of adding H.264 decoding support to the Firefox 3.6.x source, the experience taught me more about the Netscape codebase than I had bargained for. Even setting aside the nearly complete lack of documentation and of a functioning build system, the sheer amount of code was such that the codebase was essentially unmaintainable, and that was thirteen years ago, before new JavaScript, CSS and WebAssembly features got added to the mix. In the end I implemented a basic browser using the QtWebkit module, but got blocked there when that module got discontinued and replaced with the far more limited Chromium-based module.

These days I mostly hang around the Pale Moon project, which has forked the Mozilla Gecko engine into the heavily customized Goanna engine. As noted by the project, although they threw out anything unnecessary from the Gecko engine, keeping up with the constantly added features with CSS and JS is nearly impossible. It ought to be clear at this point that writing a browser from scratch with a couple of buddies will never net you a commercial-grade product, hence why Microsoft threw in the towel with its EdgeHTML.

Today’s Sponsor

The second aspect to consider with the modern WWW is who determines the standards. In 2012 the internet was set ablaze when Google, Microsoft and Netflix sought to push through the Encrypted Media Extensions (EME) standard, which requires a proprietary, closed-source module with per-browser licensing. Although Mozilla sought to protest against this, ultimately they were forced to implement it regardless.

More recently, Google has sought to improve Chrome’s advertising targeting capabilities, with Federated Learning of Cohorts (FLoC) in 2021, which was marketed as a more friendly, interest-based form of advertising than tracking with cookies. After negative feedback from many sources, Google quietly dropped FLoC, but not before renaming it to Topics API and trying to ram it through again.

Although it’s easy to call Google ‘evil’ here and point out that they dropped the ‘don’t be evil’ tagline, it’s important to note the context. When Microsoft was bundling Internet Explorer with Windows and enjoying a solid browser market share, it was doing so from its position as a software company, leading to it leveraging this advantage for features like ActiveX in corporate settings.

Google, by contrast, is primarily an advertising company, which makes it reasonable for them to leverage their browser near-monopoly for their own benefit. Meanwhile Mozilla’s Firefox browser is scraping by with a <5% market share. Mozilla has also changed since the early 2000s from a non-profit to a for-profit model, and its revenue comes from search query royalties, donations and in-browser ads.

The somewhat depressing picture that this paints is that unless you restrict the scope of the browser as Pale Moon does (no DRM, no WebRTC, no WebVR, etc.), you are not going to keep up with core HTML, CSS and JS functionality without a large (paid) team of developers, meanwhile beholden to the Big RGB Gorilla in the room in the form of Google setting the course of new functionality, including the removal of support for image formats while adding its own.

Where From Here

Although taking Google on head-first would be foolishness worthy of Don Quixote, there are ways that we can change things for the better. One is to demand that websites we use and/or maintain follow either the Progressive Enhancement or Graceful Degradation design philosophy. The latter is integral to HTML and CSS designs, with the absence of, or an error in, any of the CSS files merely leading to the HTML document being displayed without formatting, yet with the text and images visible and any URLs usable.

Progressive enhancement is similar, but more of a bottom-up approach, where the base design targets a minimum set of features, usually just HTML and CSS, with the availability of JavaScript support in the browser enhancing the experience, without affecting the core functionality for anyone entering the site with JS disabled (via NoScript and/or a blocker like µMatrix). As a bonus, doing this is great for accessibility (like screen readers) and for search engine optimization, as all text will be clearly readable for crawler bots, which tend to not use JS.
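To make this a bit more concrete, here is a rough TypeScript sketch of what progressive enhancement can look like. It assumes a hypothetical comment form that already works as a plain HTML POST; the `form.comment` selector and the names `FeatureProbe`, `probeFeatures`, `canEnhance` and `enhanceForm` are made up for illustration and do not come from any particular site or framework.

```typescript
// Hypothetical sketch: the page is assumed to contain a plain
// <form class="comment" method="post" action="..."> that works without scripts.

// The capabilities the enhancement relies on, probed rather than assumed.
interface FeatureProbe {
  hasFetch: boolean;
  hasFormData: boolean;
}

// Inspect an environment object (normally globalThis) for the needed APIs.
function probeFeatures(env: Record<string, unknown>): FeatureProbe {
  return {
    hasFetch: typeof env["fetch"] === "function",
    hasFormData: typeof env["FormData"] === "function",
  };
}

// Enhance only when every required feature is present.
function canEnhance(probe: FeatureProbe): boolean {
  return probe.hasFetch && probe.hasFormData;
}

// Upgrade the form to submit in the background. On any failure, fall back
// to a normal full-page submission so the core function keeps working.
function enhanceForm(form: HTMLFormElement): void {
  form.addEventListener("submit", (event) => {
    event.preventDefault();
    fetch(form.action, { method: "POST", body: new FormData(form) })
      .then((response) => {
        if (!response.ok) throw new Error(`HTTP ${response.status}`);
        form.reset();
      })
      // form.submit() does not re-trigger the submit listener above.
      .catch(() => form.submit());
  });
}

// Only runs in a browser. With JS disabled none of this executes and the
// plain HTML form is used as-is.
if (
  typeof document !== "undefined" &&
  canEnhance(probeFeatures(globalThis as unknown as Record<string, unknown>))
) {
  const form = document.querySelector<HTMLFormElement>("form.comment");
  if (form) enhanceForm(form);
}
```

The point of the sketch is that the script checks for the features it needs instead of a browser name or version, and that every failure path ends at the plain HTML form rather than at a blank page.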

Perhaps using methods like these we as users of the WWW can give some hint to Google and kin as to what we’d like things to be like. Considering the trend of limiting the modern web to browsers with only the latest, bleeding-edge features and the correct User-Agent string (with Discourse as a major offender), it would seem that such grassroots efforts may be the most effective in the long run, along with ensuring that alternative, non-Chromium browsers are not faced with extinction.

from Blog – Hackaday https://ift.tt/SrnviJ4
