Hard Disk Drives Have Made Precision Engineering Commonplace

Modern-day hard disk drives (HDDs) are an interesting juxtaposition: they are simultaneously the pinnacle of mass-produced, high-precision mechanical engineering and the most scorned storage technology. Despite being called derogatory names such as ‘spinning rust’, most of these drives manage a lifetime of spinning ultra-smooth magnetic storage platters mere nanometers away from the read and write heads, while the arms carrying those heads twitch around under actuators that position each head precisely above the correct microscopic magnetic track within milliseconds.

Despite decade after decade of ever more magnetic tracks being crammed onto every square millimeter of these platters, and the simple read and write heads being replaced every few years by ever more complicated ones, hard drive reliability has gone up. The first quarter report from storage company Backblaze on their HDDs shows that the annualized failure rate has gone down significantly compared to last year.

The question is whether this means that HDDs stand to become only more reliable over time, and how upcoming technologies like MAMR and HAMR may affect these metrics over the coming decades.

From Mega to Tera

The first HDDs were sold in the 1950s, with the IBM 350 storing a total of 3.75 MB on fifty 24″ (610 mm) discs, inside a cabinet measuring 152×172×74 cm. Fast-forward to today, and a top-of-the-line HDD in 3.5″ form factor (~14.7×10.2×2.6 cm) can store up to around 18 TB with conventional (non-shingled) recording.

Whereas the IBM 350 spun its platters at 1,200 RPM, HDDs for the past decades have focused on reducing the size of the platters and increasing the spindle speed (5,400 – 15,000 RPM). Other improvements have focused on moving the read and write heads closer to the platter surface.
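
To put those spindle speeds in perspective, here is a minimal sketch of the average rotational latency they imply. It assumes the usual rule of thumb that, on average, the head waits half a revolution for the right sector to come around. The function name is made up for this illustration, and seek time is not included.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector to rotate under the head, in milliseconds.

    Assumes the wait averages half of one full revolution.
    """
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    seconds_per_revolution = 60.0 / rpm
    return 0.5 * seconds_per_revolution * 1000.0


# Spindle speeds mentioned in the text above.
for rpm in (1_200, 5_400, 15_000):
    print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

Going from 1,200 to 15,000 RPM cuts this part of the access time by a factor of 12.5, which is simply the ratio of the two speeds.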

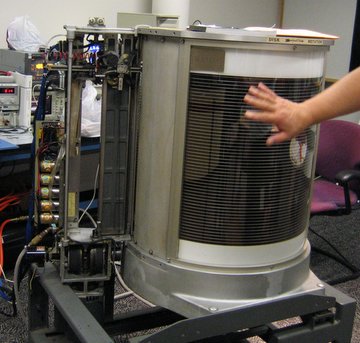

The IBM 1301 DSU (Disk Storage Unit) from 1961 was a major innovation in that it used a separate arm with read and write heads for each platter. It also innovated by using aerodynamic forces to let the arms fly over the platter surface on a cushion of air, enabling a far smaller distance between heads and platter surface.

After 46 years of development, IBM sold its HDD business to Hitachi in 2003. By that time, storage capacity had increased 48,000-fold while fitting in a much smaller volume, some 29,161 times smaller. Power usage had dropped from over 2.3 kW to around 10 W (for desktop models), while the price per megabyte had dropped from US$68,000 to $0.002. At the same time, the number of platters shrank from dozens to only a couple at most.

Storing More in Less Space

Miniaturization has always been the name of the game, whether it was about mechanical constructs, electronics or computer technology. The hulking, vacuum tube or relay-powered computer monsters of the 1940s and 1950s morphed into less hulking transistor-powered computer systems before turning into today’s sleek, ASIC-powered marvels. At the same time, HDD storage technology underwent a similar change.

The control electronics for HDDs experienced all of the benefits of increased use of VLSI circuitry, along with increasingly precise and low-power servo technology. As improvements from materials science enabled lighter, smoother (glass or aluminium) platters with improved magnetic coatings, areal density kept shooting up. With the properties of all the individual components (ASIC packaging, solder alloys, actuators, aerodynamics of HDD arms, etc.) better understood, major revolutions turned into incremental improvements.

As happened in other fields, the physical limits on write speeds and random access times would eventually mean that HDDs are most useful where large amounts of storage for little money and high durability are essential. This allowed the HDD market to optimize for desktop and server systems, as well as surveillance and backup (competing with tape).

Understanding HDD Failures

Although the mechanical parts of an HDD are often considered the weakest spot, there are a number of possible causes of failure, including:

- Human error.

- Hardware failure (mechanical, electronics).

- Firmware corruption.

- Environmental (heat, moisture).

- Power.

HDDs are given separate impact ratings for when they are powered down and for when they are in operation (platters spinning and heads not parked). If these ratings are exceeded, damage to the actuators that move the arms, or a crash of the heads onto the platter surface, can occur. If these tolerances are not exceeded, then normal wear is most likely to be the primary cause of failure, for which the manufacturer specifies an MTBF (Mean Time Between Failures) number.

This MTBF number is derived by extrapolating from the wear observed after a certain time period, as is industry standard. With the MTBF for HDDs generally given as between 100,000 and 1 million hours, testing this entire period would require the drive to be active for roughly 11 to 114 years. The number also assumes that the drive operates under the recommended operating conditions, as happens at a storage company like Backblaze.
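
As a rough check on those figures, the sketch below converts an MTBF in hours into years of continuous operation. It also shows the annual failure rate such an MTBF would imply, but only under the simplifying assumption of a constant failure rate (an exponential lifetime model), which is an assumption of this example and not something manufacturers or Backblaze state. The function names are illustrative.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def mtbf_to_years(mtbf_hours: float) -> float:
    """Years of non-stop operation needed to cover the MTBF once."""
    return mtbf_hours / HOURS_PER_YEAR


def implied_afr(mtbf_hours: float) -> float:
    """Fraction of drives expected to fail within one year.

    Assumption: constant failure rate, so lifetimes are exponentially
    distributed with mean equal to the MTBF.
    """
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


# The two ends of the MTBF range quoted in the text.
for mtbf in (100_000, 1_000_000):
    print(
        f"MTBF {mtbf:>9,} h: {mtbf_to_years(mtbf):6.1f} years, "
        f"implied AFR {implied_afr(mtbf):.2%}"
    )
```

Under that assumption, an MTBF of 1 million hours corresponds to a bit under 1% of drives failing per year, which is the same order of magnitude as the fleet-wide rates storage companies report.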

Obviously, exposing an HDD to extreme shock (e.g. dropping it on a concrete floor) or extreme power events (power surge, ESD, etc.) will limit its lifespan. Less obvious are manufacturing flaws, which can occur with any product and are the reason why there is an ‘acceptable failure rate’ for most products.

It’s Not You, It Was the Manufacturing Line

Despite the great MTBF numbers for HDDs and the obvious efforts by Backblaze to keep each of their nearly 130,000 drives happily spinning along until being retired to HDD Heaven (usually in the form of a mechanical shredder), they reported an annualized failure rate (AFR) of 1.07% for the first quarter of 2020. Happily, this is the lowest failure rate for them since they began to publish these reports in 2013. The Q1 2019 AFR was 1.56%, for example.
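
For readers wondering how a quarterly figure turns into an annualized rate, here is a minimal sketch. It assumes the drive-days convention, which is my understanding of how Backblaze describes its method: failures divided by drive-years of operation, expressed as a percentage. The fleet numbers below are hypothetical and chosen only to make the arithmetic easy to follow; they are not Backblaze data.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """AFR in percent: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100.0


# Hypothetical fleet: 1,000 drives that each ran for a 91-day quarter,
# with 3 failures observed in that time.
drive_days = 1_000 * 91
afr = annualized_failure_rate(failures=3, drive_days=drive_days)
print(f"AFR: {afr:.2f}%")
```

Counting drive-days rather than drives means that a drive added halfway through the quarter only contributes the days it actually ran.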

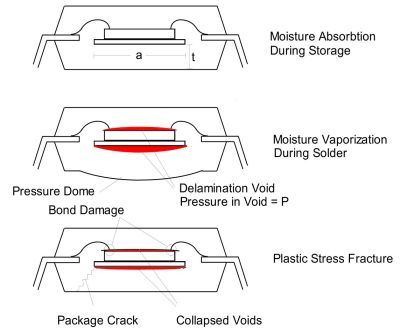

As we have covered previously, during the manufacturing and handling of integrated circuits (ICs), flaws can be introduced that only become apparent later in the product’s lifespan. Over time, issues like electromigration, thermal stress and mechanical stress can cause failures in a circuit, ranging from bond wires inside the IC packaging snapping off to electromigration destroying solder joints as well as circuits inside the IC packaging (especially after ESD events).

The mechanical elements of an HDD depend on precise manufacturing tolerances, as well as proper lubrication. In the past, stuck heads (‘stiction’) could be an issue, whereby the properties of the lubricant changed over time to the point where the arms could no longer move out of their parked position. Improved lubrication types have more or less solved this issue by now.

Yet, every step in a manufacturing process has a certain chance to introduce flaws, which ultimately add up to something that could spoil the nice, shiny MTBF number, instead putting the product on the wrong side of the ‘bathtub curve’ for failure rates. This curve is characterized by an early spike in product failures due to serious manufacturing defects, after which the failure rate drops and stays low until the end of the MTBF lifespan approaches and wear-out pushes it back up.
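
The shape of such a bathtub curve can be sketched by adding up three failure-rate terms: a decreasing one for early defects, a constant one for random failures, and an increasing one for wear-out. The example below uses Weibull hazard functions for the first and last term, which is a common textbook way to model this. All parameter values are invented purely to produce the shape and do not describe any real drive.

```python
def weibull_hazard(t: float, shape: float, scale: float) -> float:
    """Weibull hazard rate h(t) = (k / s) * (t / s)^(k - 1) for t > 0."""
    if t <= 0:
        raise ValueError("t must be positive")
    return (shape / scale) * (t / scale) ** (shape - 1)


def bathtub_hazard(t_years: float) -> float:
    """Illustrative failure rate per year at age t_years (made-up parameters)."""
    infant_mortality = weibull_hazard(t_years, shape=0.5, scale=20.0)  # falls over time
    random_failures = 0.01                                             # flat floor
    wear_out = weibull_hazard(t_years, shape=5.0, scale=8.0)           # rises over time
    return infant_mortality + random_failures + wear_out


for age in (0.1, 1, 3, 5, 7, 9):
    print(f"age {age:>4} years: hazard {bathtub_hazard(age):.3f} per year")
```

A shape parameter below 1 gives a falling hazard and one above 1 gives a rising hazard, so the sum is high at the start, low in the middle and high again at the end.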

Looking Ahead

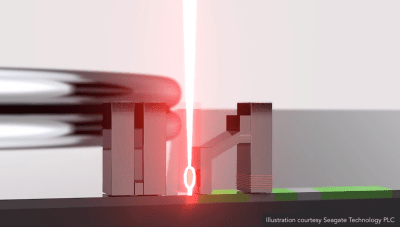

HDDs as we know them today are indicative of a mature manufacturing process, with many of the old issues that plagued them over the past half century fixed or mitigated. Relatively major changes, such as the shift to helium-filled drives, have not had a noticeable effect on failure rates so far. Other changes, such as the shift from perpendicular recording (PMR, or CMR) to Heat-Assisted Magnetic Recording (HAMR), should not have a noticeable effect on HDD longevity, barring any issues with the new technology itself.

Basically, HDD technology’s future appears to be boring in all the right ways for anyone who likes to have a lot of storage capacity for little money that should last for at least a solid decade. The basic principle behind HDDs, namely that of storing magnetic orientations on a platter, happens to be one that could essentially be taken down to single molecules. With additions like HAMR, the long-term stability of these magnetic orientations should be improved significantly as well.

This is a massive benefit over NAND Flash, which instead uses small capacitors to store charges, and uses a write method that physically damages these capacitors. The physical limits here are much more severe, which has led to ever more complicated constructs, such as quad-level (QLC) Flash, which has to differentiate between 16 possible voltage states in each cell. This complexity has led to QLC-based storage drives being barely faster than a 5,400 RPM HDD in many scenarios, especially when it comes to latency.
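
The figure of 16 voltage states follows directly from storing four bits per cell, since each extra bit doubles the number of levels the cell has to distinguish. A small sketch of that arithmetic follows; the SLC, MLC and TLC names are the usual industry labels for one, two and three bits per cell and are added here for comparison.

```python
def voltage_states(bits_per_cell: int) -> int:
    """Number of distinct charge levels needed to encode bits_per_cell bits."""
    if bits_per_cell < 1:
        raise ValueError("bits_per_cell must be at least 1")
    return 2 ** bits_per_cell


# Common NAND Flash cell types and their bits per cell.
cell_types = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

for name, bits in cell_types.items():
    print(f"{name}: {bits} bit(s) per cell, {voltage_states(bits)} voltage states")
```

Because the usable voltage window of a cell does not grow along with it, every added bit halves the margin between neighbouring states.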

Spinning Down

The first HDD which I used in a system of my own was probably a 20 or 30 MB Seagate one in the IBM PS/2 (386SX) that my father gave to me after his workplace had switched over to new PCs and probably wanted to free up some space in its storage area. Back in the MS-DOS days this was sufficient for DOS, a stack of games, WordPerfect 5.1 and much more. By the end of the 90s, this was of course a laughable amount, and we were talking about gigabytes, not megabytes, when it came to HDDs.

Despite having gone through many PCs and laptops since then, I have ironically only had an SSD outright give up and die on me. This, along with the data from the industry, such as these Backblaze reports, makes me feel pretty confident that the last HDD won’t spin down for a while yet. Maybe this will change when something like 3D XPoint memory becomes affordable and large enough.

Until then, keep spinning.

from Blog – Hackaday https://ift.tt/3kGsFYD
